Balancing Innovation and Control

Managing LLM Risk in Financial Services

Large Language Models (LLMs) such as ChatGPT, Copilot, and Gemini represent a generational leap in enterprise productivity. They can accelerate software development, automate report writing, improve customer support, and drive operational insights at unprecedented speed. For financial institutions—where compliance, precision, and trust define the brand—these tools promise measurable efficiency gains.

Yet the very capability that makes LLMs powerful—their ability to understand, generate, and learn from human-like language—also introduces profound data-security, regulatory, and reputational risks.

Every prompt entered into a public LLM is, effectively, an outbound data transmission to an uncontrolled external system. When employees paste internal reports, source code, or personally identifiable information (PII) into such tools, the bank’s confidential data may leave its perimeter. Under regimes such as GDPR, the CBUAE Consumer Protection Regulations, PCI DSS, or APRA CPS 234, such disclosures can constitute data breaches or reportable incidents, even when unintentional.

This paper explores the tension between innovation and control, outlines the risk vectors unique to LLMs, and provides a pragmatic framework for adopting secure generative-AI capabilities within a banking environment. It also highlights the role of emerging technologies such as AI Firewalls—including solutions from vendors like Contextul.io—in enabling safe, policy-compliant LLM usage without stifling innovation.

1. The Promise of LLMs in Financial Services

1.1 The efficiency imperative

Banking is an information-heavy industry. The ability to summarise, classify, and generate language-based artefacts has immediate applications:

· Software Engineering: Code completion, test generation, and documentation.

· Operations: Drafting policies, procedures, and audit responses.

· Risk & Compliance: Automating control narratives, mapping regulations to internal frameworks.

· Customer Service: Conversational chatbots capable of natural, context-aware responses.

· Data Analytics: Querying structured and unstructured data with natural language prompts.

McKinsey estimates that generative AI could add $200–$340 billion in annual value to the banking sector globally, primarily through productivity gains and faster time-to-market for digital initiatives.

In short, LLMs are no longer a curiosity; they are becoming an enterprise necessity. The challenge is to unlock this capability without creating new vectors of regulatory or reputational exposure.

2. The Problem: Shadow AI and Uncontrolled Data Egress

2.1 How risk manifests

Despite internal bans, employees at many large banks already experiment with ChatGPT, Gemini (formerly Bard), and Copilot. They use them to write meeting notes, refine documentation, or even debug code. This unsanctioned usage, known as Shadow AI, usually arises from good intentions: people simply want to work faster.

But every prompt is a potential data-loss event. Consider:

· A relationship manager pastes a client pitch deck to “make it sound more professional.”

· A financial controller asks ChatGPT to rephrase internal earnings commentary.

· A developer uploads production logs for debugging assistance.

· A compliance officer asks an LLM to summarise a Suspicious Activity Report (SAR).

In each case, proprietary or regulated data is transmitted to a public cloud endpoint outside the bank’s control, where it may be stored or used for model retraining. Even when vendors state that they do not retain prompts, the bank cannot independently verify that assurance.

2.2 Regulatory and contractual implications

Such actions may breach:

· Data Protection Laws (GDPR, DIFC DP Law, PDPL in KSA, etc.) – particularly regarding data transfer and purpose limitation.

· Banking Secrecy Laws – prohibiting disclosure of client financial data.

· Internal Outsourcing Frameworks – since unvetted generative AI services act as unapproved data processors.

· Third-party contractual obligations – e.g., non-disclosure agreements, embargoed financial results, etc.

Supervisory authorities (e.g., European Central Bank, CBUAE, PRA, APRA) have already emphasised that AI usage must comply with existing risk frameworks for outsourcing, operational resilience, and data protection. “Innovation” does not exempt an institution from compliance.

2.3 The organisational blind spot

Many banks lack visibility into how employees interact with LLMs. Traditional Data Loss Prevention (DLP) and Cloud Access Security Broker (CASB) tools are insufficient on their own: they typically detect file movements and URLs, not prompt content or semantic risk. The result is an expanding “blind zone” where human creativity intersects with unmonitored AI interactions, an unacceptable position for regulated financial institutions.

3. Understanding the Risk Landscape

4. Why a Blanket Ban Doesn’t Work

Many banks have simply banned the use of public LLMs, blocking access at the firewall or proxy level. While this seems prudent, it produces three side effects:

1. Innovation flight: High-performing staff adopt personal devices or networks to bypass restrictions.

2. Talent frustration: Younger, digital-native employees perceive the organisation as outdated or bureaucratic.

3. Missed opportunity: Competing institutions that adopt controlled AI gain material efficiency advantages.

In practice, a ban creates risk displacement, not risk reduction. What is needed is a controlled adoption framework—a way to enable AI safely, visibly, and compliantly.

5. Designing a Secure LLM Framework for Banks

5.1 Guiding principles

A resilient approach should rest on five principles:

1. Visibility: Know who is using what, and for what purpose.

2. Control: Enforce policy boundaries at the prompt level.

3. Containment: Prevent sensitive data from leaving the perimeter.

4. Transparency: Log and audit all AI interactions.

5. Enablement: Provide safe, sanctioned alternatives that actually work.

5.2 Key components

(a) AI Governance Framework

· Policy: Define acceptable use, data classification boundaries, and prohibited data types for AI systems.

· Roles: Appoint an AI Risk Officer and cross-functional AI Review Board (CISO, Legal, Data Protection, Model Risk, Audit).

· Lifecycle Management: Govern AI use like any other critical application—registration, risk assessment, monitoring, decommissioning.

· Training: Educate employees on what is safe to share, and why controls exist.
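The lifecycle stages above can be expressed as a simple state machine, so that no AI use case reaches monitored production use without a recorded risk assessment. The following is a minimal Python sketch; the stage names, the permitted transitions, and the `AIUseCase` class are hypothetical illustrations, not a prescribed governance model.

```python
from enum import Enum


class Stage(Enum):
    REGISTERED = "registered"
    RISK_ASSESSED = "risk_assessed"
    MONITORED = "monitored"
    DECOMMISSIONED = "decommissioned"


# Hypothetical transition rules: a use case cannot skip risk assessment,
# and a monitored use case can be sent back for re-assessment.
TRANSITIONS = {
    Stage.REGISTERED: {Stage.RISK_ASSESSED, Stage.DECOMMISSIONED},
    Stage.RISK_ASSESSED: {Stage.MONITORED, Stage.DECOMMISSIONED},
    Stage.MONITORED: {Stage.RISK_ASSESSED, Stage.DECOMMISSIONED},
    Stage.DECOMMISSIONED: set(),
}


class AIUseCase:
    """Hypothetical registry entry for one AI use case."""

    def __init__(self, name: str, owner: str):
        self.name = name
        self.owner = owner
        self.stage = Stage.REGISTERED
        self.history = [Stage.REGISTERED]  # audit trail of stages visited

    def advance(self, target: Stage) -> None:
        # Reject any move the governance rules do not permit.
        if target not in TRANSITIONS[self.stage]:
            raise ValueError(
                f"{self.name}: cannot move from {self.stage.value} to {target.value}"
            )
        self.stage = target
        self.history.append(target)
```

Re-assessment is modelled as a move from `MONITORED` back to `RISK_ASSESSED`, for example after a model version change. A real registry would also persist approvals, approver identities, and evidence for each transition.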

(b) Technical Enforcement Layer – the “AI Firewall”

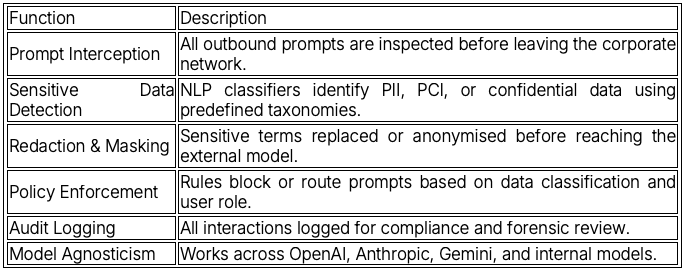

An AI Firewall—such as that developed by Contextul.io or similar vendors—acts as a policy-enforcing gateway between users and external LLMs. It monitors, classifies, and sanitises prompts and responses in real time.

Capabilities include:

Effectively, this creates a controlled conduit—allowing productivity gains while ensuring regulatory compliance.
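The allow/sanitise/block decision at the heart of an AI Firewall can be sketched in a few lines. This is a minimal Python illustration under stated assumptions: the regular expressions, the blocked terms, and the `inspect_prompt` function are hypothetical and do not represent Contextul.io's or any other vendor's implementation. Production gateways typically combine pattern matching with ML-based classification and also inspect model responses.

```python
import re
from dataclasses import dataclass, field

# Hypothetical detection rules; a real gateway would load these from a policy engine.
REDACT_PATTERNS = {
    # Loose IBAN shape: country code, two check digits, 11-30 alphanumerics.
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}
# Hypothetical categories that policy forbids sending to any external LLM.
BLOCK_TERMS = ("suspicious activity report", "embargoed")


@dataclass
class Decision:
    action: str   # "allow", "sanitise" or "block"
    prompt: str   # text forwarded to the external LLM ("" when blocked)
    findings: list = field(default_factory=list)


def inspect_prompt(prompt: str) -> Decision:
    lowered = prompt.lower()
    # Block outright when the prompt references a prohibited category.
    hits = [term for term in BLOCK_TERMS if term in lowered]
    if hits:
        return Decision("block", "", hits)
    # Otherwise replace each detected entity with a typed placeholder.
    sanitised, findings = prompt, []
    for label, pattern in REDACT_PATTERNS.items():
        sanitised, count = pattern.subn(f"[{label}]", sanitised)
        if count:
            findings.append(label)
    return Decision("sanitise" if findings else "allow", sanitised, findings)
```

For example, `inspect_prompt("Email jane.doe@example.com about IBAN GB82WEST12345698765432")` returns a `sanitise` decision whose forwarded prompt is `"Email [EMAIL] about IBAN [IBAN]"`, while any prompt mentioning a Suspicious Activity Report is blocked outright.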

(c) Private or Hosted LLMs

Banks with advanced data platforms may deploy private LLM instances—either open-source (e.g., Llama, Mistral) or licensed proprietary models—within secure environments.

· Deploy within VPC or on-premises infrastructure.

· Fine-tune on internal documentation using approved datasets.

· Apply data classification filters before ingestion.

· Integrate with Identity & Access Management (IAM), DLP, and Security Information & Event Management (SIEM) systems.

· Support federated queries—so that internal LLMs can leverage approved external models via the AI Firewall.
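The “data classification filters before ingestion” step can be as simple as a gate that admits only documents below a classification ceiling and explicitly approved for training. A minimal Python sketch, assuming a hypothetical four-tier classification scheme and a per-document approval flag; a bank's own labels and approval workflow would replace these.

```python
from dataclasses import dataclass
from enum import IntEnum


class Classification(IntEnum):
    # Hypothetical four-tier scheme, ordered from least to most sensitive.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class Document:
    doc_id: str
    classification: Classification
    approved_for_training: bool


# Assumed policy ceiling: nothing above INTERNAL enters a fine-tuning corpus.
MAX_TRAINING_CLASS = Classification.INTERNAL


def filter_for_ingestion(docs):
    """Split documents into (accepted, rejected) before fine-tuning."""
    accepted, rejected = [], []
    for doc in docs:
        ok = doc.approved_for_training and doc.classification <= MAX_TRAINING_CLASS
        (accepted if ok else rejected).append(doc)
    return accepted, rejected
```

Rejected documents are returned rather than silently dropped so that each exclusion can itself be logged for audit.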

(d) Data Loss Prevention (DLP) Enhancement

Augment traditional DLP with semantic detection and contextual classification: recognising phrases, entities, or patterns that indicate risk even when the data is not an exact match (e.g., “account number for client in Bahrain”). AI Firewalls are typically designed to integrate with these tools.
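The difference between exact-match and contextual detection can be illustrated with a toy scoring function: financial vocabulary raises the score on its own, and a long number near that vocabulary raises it further. This is a minimal Python sketch; the weights, window size, and threshold are hypothetical, and production semantic DLP would typically rely on trained entity-recognition or classification models rather than a keyword list.

```python
import re

# Hypothetical weights for context terms that suggest client financial data.
CONTEXT_WEIGHTS = {"account": 2, "iban": 3, "client": 1, "customer": 1, "balance": 2}
LONG_NUMBER = re.compile(r"\d{8,34}")
WINDOW = 6      # tokens either side of a number that count as "nearby"
THRESHOLD = 3   # assumed score at which a prompt is flagged for review


def risk_score(text: str) -> int:
    tokens = re.findall(r"\w+", text.lower())
    # Each distinct context term adds weight, even with no identifier present.
    score = sum(CONTEXT_WEIGHTS.get(t, 0) for t in set(tokens))
    for i, tok in enumerate(tokens):
        if LONG_NUMBER.fullmatch(tok):
            nearby = tokens[max(0, i - WINDOW): i + WINDOW + 1]
            # A long number beside financial vocabulary is stronger evidence
            # than either signal alone; an isolated number adds little.
            score += 3 if any(t in CONTEXT_WEIGHTS for t in nearby) else 1
    return score


def is_risky(text: str) -> bool:
    return risk_score(text) >= THRESHOLD
```

With these assumed weights, `risk_score("account number for client in Bahrain")` is 3, enough to flag the prompt even though it contains no account number, while `"Ticket 12345678 is resolved"` scores 1 because the number has no financial context.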

(e) Third-Party Risk Controls

Treat LLM providers as critical suppliers:

· Conduct due diligence (SOC 2, ISO/IEC 27001, CSA STAR).

· Require data residency transparency and model retraining opt-outs.

· Insert contractual controls: data deletion SLAs, audit rights, incident notification, and jurisdictional compliance (e.g., GCC data localisation).
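Requirements like these can be tracked as a checklist that reports gaps per provider. The sketch below is a hypothetical Python illustration: the `VendorProfile` fields and contract-term identifiers are assumptions, and jurisdictional checks such as GCC data localisation are left out for brevity.

```python
from dataclasses import dataclass, field

# Hypothetical requirement sets drawn from the due-diligence checklist above.
REQUIRED_CERTS = {"SOC 2", "ISO/IEC 27001", "CSA STAR"}
REQUIRED_TERMS = {"data_deletion_sla", "audit_rights", "incident_notification"}


@dataclass
class VendorProfile:
    name: str
    certifications: set = field(default_factory=set)
    data_residency_disclosed: bool = False
    retraining_opt_out: bool = False
    contract_terms: set = field(default_factory=set)


def due_diligence_gaps(vendor: VendorProfile) -> list:
    """Return human-readable gaps; an empty list means all checks pass."""
    gaps = [f"missing certification: {c}"
            for c in sorted(REQUIRED_CERTS - vendor.certifications)]
    if not vendor.data_residency_disclosed:
        gaps.append("data residency not disclosed")
    if not vendor.retraining_opt_out:
        gaps.append("no model retraining opt-out")
    gaps += [f"missing contract term: {t}"
             for t in sorted(REQUIRED_TERMS - vendor.contract_terms)]
    return gaps
```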

6. Implementation Roadmap

7. Case Study Snapshot (Illustrative)

Bank A (Global Tier 1)

Challenge: Shadow use of ChatGPT by 12,000 staff, causing regulatory concern.

Action: Deployed AI Firewall integrating with Microsoft Copilot, OpenAI API, and internal policy engine.

Outcome:

· 40% of previously blocked queries now safely processed via sanitisation.

· Zero data-loss events post-deployment.

· Employee satisfaction up 25% due to safe enablement instead of outright bans.

· Positive audit finding from internal risk committee.

8. Measuring Success

Key performance indicators for secure LLM adoption include:

· Reduction in unsanctioned AI traffic (measured via proxy logs).

· Prompt policy compliance rate (approved vs blocked prompts).

· Incident volume (data leakage attempts detected and remediated).

· User enablement metrics (adoption of sanctioned AI tools).

· Time-to-approve new AI use cases (indicator of governance maturity).
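Several of these indicators can be computed directly from AI Firewall decision logs. A minimal Python sketch, assuming a hypothetical `PromptEvent` record with user, tool, and action fields; counting both blocked and sanitised prompts as detected leakage attempts is an illustrative choice, not a standard definition. Unsanctioned traffic would come from proxy logs and is not modelled here.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptEvent:
    # Hypothetical record logged by the AI Firewall for each prompt.
    user: str
    tool: str     # sanctioned tool the prompt was routed to
    action: str   # "allow", "sanitise" or "block"


def prompt_kpis(events):
    total = len(events)
    actions = Counter(e.action for e in events)
    passed = actions["allow"] + actions["sanitise"]
    return {
        # Share of prompts forwarded, either unchanged or after sanitisation.
        "compliance_rate": passed / total if total else 0.0,
        # Prompts where sensitive content was detected (blocked or redacted).
        "detected_leakage_attempts": actions["block"] + actions["sanitise"],
        # Distinct users per sanctioned tool, as an adoption measure.
        "users_per_tool": {
            tool: len({e.user for e in events if e.tool == tool})
            for tool in sorted({e.tool for e in events})
        },
    }
```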

Banks that treat AI enablement as a measurable operational capability—not just a policy—gain both control and agility.

9. Recommendations

For CISOs and CTOs

1. Acknowledge inevitability: Generative AI is not optional; it will permeate workflows. The question is not if but how safely.

2. Shift from prohibition to protection: Move beyond bans. Build controlled enablement with auditable guardrails.

3. Deploy an AI Firewall: Solutions like Contextul.io offer immediate, low-friction visibility and control over AI interactions.

4. Develop a unified AI policy: Align Legal, Compliance, Risk, and Technology. Clearly delineate responsibilities.

5. Integrate with enterprise security stack: Extend DLP, IAM, and SIEM to cover prompt-level telemetry.

6. Educate continuously: Provide mandatory training for all staff on AI risk, confidentiality, and safe usage.

7. Plan for incident response: Define escalation, forensic logging, and notification pathways for AI-related data incidents.

8. Adopt privacy-by-design: Embed anonymisation, data minimisation, and consent logic into all LLM integrations.

9. Engage regulators early: Proactively disclose AI risk frameworks to supervisors—show governance maturity, not secrecy.

10. Pilot, measure, iterate: Start small, prove value, then scale with confidence.
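Recommendations 5 and 8 meet in the telemetry itself: prompt-level events can be sent to the SIEM without copying sensitive content into yet another system. The following minimal Python sketch emits one JSON line per prompt, with a keyed-hash (HMAC-SHA-256) pseudonym for the user and a keyed fingerprint in place of the raw prompt text. The field names and the hard-coded key are hypothetical; a real deployment would use the SIEM's own schema and a key held in a key-management service.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical key for illustration only; never hard-code keys in production.
PSEUDONYM_KEY = b"replace-with-managed-secret"


def pseudonymise(value: str) -> str:
    """Keyed hash so analysts can correlate events without seeing the raw value."""
    digest = hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def prompt_event(user_id: str, tool: str, action: str, prompt: str, findings: list) -> str:
    """Build one JSON line for SIEM ingestion; the raw prompt is never logged."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": "llm_prompt",
        "user": pseudonymise(user_id),
        "tool": tool,
        "action": action,
        "findings": findings,
        "prompt_fingerprint": pseudonymise(prompt),
        "prompt_chars": len(prompt),
    }
    return json.dumps(event, sort_keys=True)
```

A keyed hash rather than a plain SHA-256 is used so that short, guessable prompts or user IDs cannot be recovered by hashing candidate values without the key.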

10. The Road Ahead: AI Governance as a Competitive Advantage

In the near future, regulators are likely to expect every major bank to demonstrate AI risk governance equivalent to existing operational resilience standards. Supervisory inspections can be expected to ask:

· Where are AI models used in production?

· How is data protected before, during, and after prompt submission?

· What independent assurance exists for AI vendors?

· How are decisions validated for bias or explainability?

Institutions that prepare now—embedding technical controls and governance discipline—will be positioned to leverage generative AI safely and faster than their peers.

Conversely, those that continue with blanket prohibitions will watch innovation move elsewhere. The competitive gap will widen, not just in cost efficiency but in culture, agility, and digital reputation.

11. Conclusion

Generative AI and LLMs offer the banking sector a profound opportunity to enhance productivity, automate complex processes, and personalise customer experience. But with that opportunity comes the duty to protect the institution’s most valuable asset: its data.

As CTOs and CISOs, our role is to create a bridge between innovation and assurance—to enable creativity without compromising compliance. The practical path forward lies not in restriction but in intelligent enablement: controlled access, monitored usage, and proactive governance.

Solutions such as AI Firewalls (e.g., Contextul.io) provide the technological foundation. Strong policies, disciplined culture, and leadership commitment complete the framework.

The banks that master this balance will not only avoid breaches—they will define the new standard for responsible, high-velocity innovation in financial services.